One of the main differences between autonomous mobile robots (AMRs) and traditional AGVs is that the former do not require physical references such as magnetic tapes or beacons for guidance. Instead, AMR navigation relies on onboard sensors and software that let the robot perceive its surroundings and move intelligently and safely.

Navigation in mobile robotics is the technology that enables robots to autonomously move from one point to another, both indoors and outdoors. This process involves knowing their current position, building a map of the environment, planning the route to follow, and controlling their movements to reach the desired destination while performing the task they were designed for.

This article offers a general overview of autonomous navigation and mobile robotics, as well as some key technological insights into mapping and localization, all based on Robotnik’s more than 20 years of experience as a manufacturer of autonomous mobile robots.

WHAT IS AUTONOMOUS NAVIGATION IN MOBILE ROBOTICS?

In short, autonomous navigation is the ability of a mobile robot to independently move from a starting point to a predetermined destination without direct human intervention along the way. This process involves several key stages:

- Mapping: The robot creates or uses a map to understand the physical space and plan routes.

- Localization: After modeling the environment, the robot must know its exact position within it at all times.

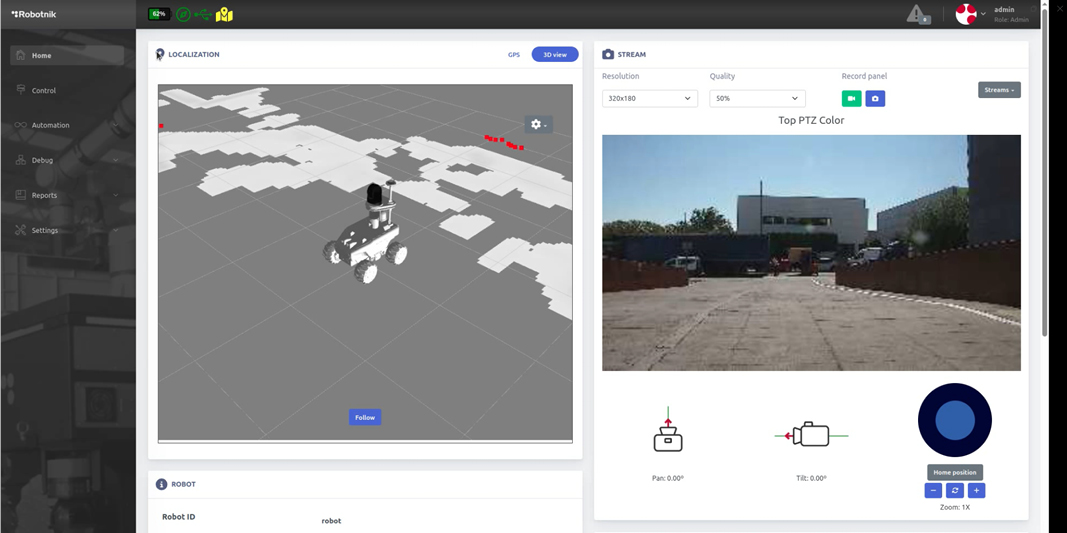

Traditionally, mapping and localization were performed sequentially, but nowadays, some manufacturers like Robotnik incorporate Simultaneous Localization and Mapping (SLAM) technology, which allows the robot to map the environment while simultaneously estimating its position.

- Path planning: Based on the map and its current position, the robot calculates the most suitable trajectory to reach its destination. Route planning becomes smarter as Machine Learning and other Artificial Intelligence techniques are integrated, allowing decisions to adapt to patterns learned during continuous operation.

- Movement and obstacle detection: The robot executes the calculated route by controlling speed, direction, and maneuvers to reach the desired point, while detecting and avoiding possible static or dynamic obstacles.

These tasks require a sophisticated combination of hardware and software, including high-precision sensors, advanced algorithms, and robust control systems.
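Path planning, the third stage above, is often performed on a grid version of the map. The following is a minimal Python sketch of A* search on a 4-connected occupancy grid; it is an illustration, not Robotnik's planner. The grid format ('#' for occupied, '.' for free) and the `astar` function are hypothetical, chosen only to show the idea.

```python
import heapq

def astar(grid, start, goal):
    """A* search on a 4-connected grid.

    grid: list of equal-length strings, '#' = occupied, '.' = free (hypothetical format).
    start, goal: (row, col) tuples. Returns the list of cells from start to goal,
    or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: never overestimates for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]   # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # outdated entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


if __name__ == "__main__":
    grid = ["....",
            ".##.",
            "...."]
    print(astar(grid, (0, 0), (2, 3)))
```

Because the Manhattan heuristic never overestimates the remaining cost for unit steps in four directions, A* returns a shortest path on this grid. Real planners typically add robot footprint inflation, diagonal moves, and smoothing on top of this.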

KEY TECHNOLOGIES IN AUTONOMOUS NAVIGATION

SLAM (Simultaneous Localization and Mapping) and LiDAR (Light Detection and Ranging) are fundamental technologies in the development of autonomous navigation systems. SLAM allows a robot or autonomous vehicle to build a map of the environment while determining its own location within it — all in real time.

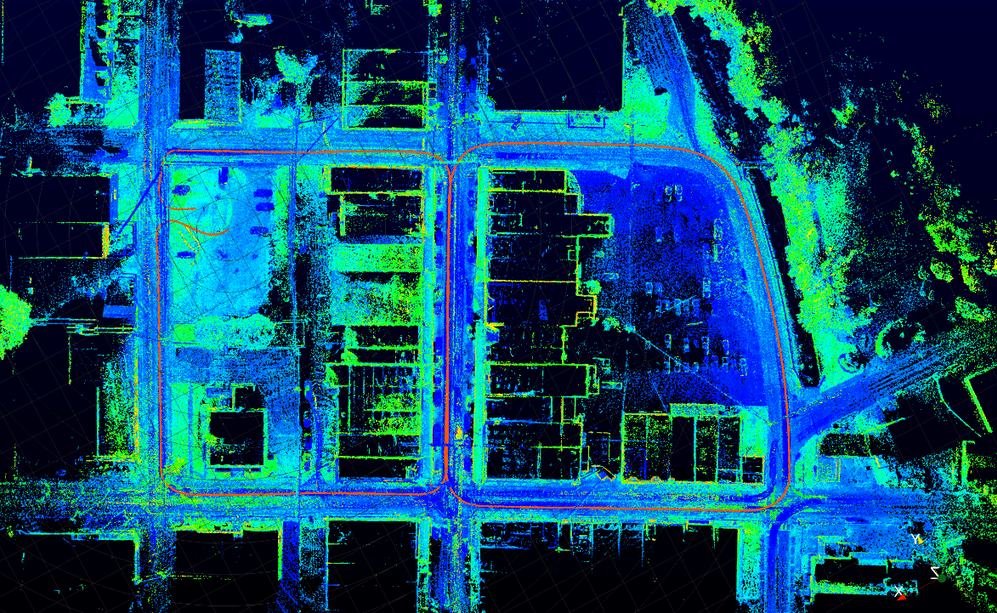

A LiDAR sensor emits laser pulses and, typically, measures the time each one takes to reflect off a surface and return. From these distances it generates a point cloud: a detailed 2D or 3D representation of the surroundings. Combined, these technologies enable highly accurate and reliable spatial perception. Below is a closer look at each:

SLAM (SIMULTANEOUS LOCALIZATION AND MAPPING)

SLAM technology enables localization and mapping simultaneously. In other words, the robot not only maps its environment but also uses that map to determine its exact location in real time. This method is especially useful in unknown or changing environments where no pre-existing maps are available.
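Many 2D LiDAR SLAM systems store the map as an occupancy grid, where each cell holds the probability of being occupied. The minimal Python sketch below shows only the mapping half of the problem: it assumes the robot's pose is already known (full SLAM estimates pose and map jointly) and uses a hypothetical inverse sensor model that treats the cell a beam hits as 70% likely occupied and each cell the beam passes through as 30% likely occupied. Probabilities are stored as log-odds so that repeated observations can simply be added.

```python
import math

# Hypothetical inverse sensor model, expressed as log-odds increments.
L_OCC = math.log(0.7 / 0.3)    # cell where the beam ended: likely occupied
L_FREE = math.log(0.3 / 0.7)   # cells the beam passed through: likely free

def bresenham(x0, y0, x1, y1):
    """Grid cells crossed by the straight line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy

def integrate_ray(log_odds, robot_cell, hit_cell):
    """Update the map (a dict of cell -> log-odds) with one beam that hit hit_cell."""
    ray = bresenham(*robot_cell, *hit_cell)
    for cell in ray[:-1]:
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    log_odds[hit_cell] = log_odds.get(hit_cell, 0.0) + L_OCC

def occupancy(log_odds, cell):
    """Convert log-odds back to a probability; never-observed cells are 0.5."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds.get(cell, 0.0)))


if __name__ == "__main__":
    grid = {}
    for _ in range(3):                      # the same wall seen in three scans
        integrate_ray(grid, (0, 0), (4, 2))
    print(round(occupancy(grid, (4, 2)), 3))   # prints 0.927
```

Each repeated hit pushes the cell's probability further toward "occupied", which is how the map becomes more confident as the robot keeps observing the same structures.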

In robotics, 2D SLAM is commonly used: it builds maps from 2D LiDAR sensors that represent the environment in a two-dimensional plane (x, y). However, Robotnik robots now include a ROS-based 3D SLAM module that allows robots equipped with 3D LiDARs to map and localize in three dimensions. Unlike 2D SLAM, which only captures data at the sensor's height, 3D SLAM provides a complete representation of the environment, including structures at different heights. This significantly improves localization accuracy, robustness to environmental changes, and trajectory planning efficiency, because 3D sensors capture far more data and are less affected by occlusions than a single scan plane.
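The difference between a single scan plane and full 3D perception can be illustrated with a toy point cloud. The Python sketch below assumes an idealized planar scanner that only sees points within a couple of centimetres of its mounting height; the function names, the tolerance, and the table-like example values are hypothetical.

```python
def seen_by_2d_scanner(points_xyz, sensor_height, tolerance=0.02):
    """Points an idealized planar LiDAR mounted at sensor_height would observe."""
    return [p for p in points_xyz if abs(p[2] - sensor_height) <= tolerance]

def collision_relevant(points_xyz, robot_height, floor_clearance=0.05):
    """Points inside the robot's vertical envelope (the floor itself is ignored)."""
    return [p for p in points_xyz if floor_clearance < p[2] <= robot_height]


if __name__ == "__main__":
    # Toy cloud (x, y, z in metres): two table legs sampled at 0.3 m,
    # a tabletop at 0.75 m, and a ceiling fixture at 2.5 m.
    cloud = [(1.0, 0.0, 0.3), (1.0, 0.8, 0.3),
             (1.2, 0.4, 0.75), (1.4, 0.4, 0.75),
             (2.0, 0.0, 2.5)]
    print(len(seen_by_2d_scanner(cloud, sensor_height=0.3)))   # legs only
    print(len(collision_relevant(cloud, robot_height=1.0)))    # legs and tabletop
```

A 2D scanner at 0.3 m sees only the table legs and could plan a route underneath the tabletop, while a 3D cloud reveals that a 1 m tall robot would collide with it.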

HOW DOES SLAM WORK?

If the sensors are the robot's eyes, SLAM is what makes sense of what they see. It takes data collected from the environment by LiDAR sensors, cameras, or IMUs (Inertial Measurement Units) and processes it to:

- Detect features in the environment (walls, doors, furniture, obstacles, etc.)

- Create a real-time digital map based on that data

- Compare new readings with the generated map to estimate the robot's position
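The last step, comparing new readings with the map, is often called scan matching. The Python sketch below uses a brute-force correlative search: it tries poses in a small window around an initial guess (for example, from wheel odometry) and keeps the one whose transformed scan points land on the most occupied cells. The window and step sizes and all function names are hypothetical; production SLAM systems use faster matchers and probabilistic scoring.

```python
import math

def transform(points, x, y, theta):
    """Move scan points from the robot frame into the map frame for pose (x, y, theta)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in points]

def score(points_world, occupied, resolution):
    """Count how many points fall on occupied grid cells."""
    hits = 0
    for wx, wy in points_world:
        cell = (math.floor(wx / resolution), math.floor(wy / resolution))
        if cell in occupied:
            hits += 1
    return hits

def match_scan(scan, occupied, resolution, guess, xy_window=0.2, xy_step=0.05,
               th_window=math.radians(10), th_step=math.radians(2)):
    """Exhaustively search poses near `guess`; return (best_pose, best_score)."""
    best_pose, best_score = guess, -1
    n_xy = round(xy_window / xy_step)
    n_th = round(th_window / th_step)
    gx, gy, gth = guess
    for i in range(-n_xy, n_xy + 1):
        for j in range(-n_xy, n_xy + 1):
            for k in range(-n_th, n_th + 1):
                pose = (gx + i * xy_step, gy + j * xy_step, gth + k * th_step)
                s = score(transform(scan, *pose), occupied, resolution)
                if s > best_score:
                    best_pose, best_score = pose, s
    return best_pose, best_score
```

In a full SLAM loop, the matched pose corrects the odometry estimate, and the same scan is then used to update the map.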

TYPES OF SLAM

In robotics, SLAM technology can be mainly classified into two types: visual SLAM and LiDAR-based SLAM.

- Visual SLAM (vSLAM): Visual SLAM uses cameras instead of lasers to capture visual features and build maps from them. It can be monocular (single camera), stereo (two cameras), or RGB-D (depth camera). This technique is often more cost-effective, although it may have limitations in environments with variable lighting.

- LiDAR SLAM: Uses LiDAR sensors to generate detailed maps through laser pulses. LiDAR SLAM is highly accurate and less affected by lighting conditions, making it effective in low-light or completely dark environments.
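The ranging principle behind LiDAR SLAM can be written in a few lines. In this Python sketch, the distance measured by a pulsed time-of-flight sensor follows from the round-trip time of a pulse (d = c·t / 2), and a 2D scan is converted into Cartesian points in the sensor frame. The parameter names loosely mirror the fields of the ROS `sensor_msgs/LaserScan` message (`angle_min`, `angle_increment`, `range_min`, `range_max`), but the functions themselves are hypothetical helpers, not part of any ROS API.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_to_range(round_trip_s):
    """Distance for a pulsed time-of-flight LiDAR: the pulse travels out and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert a planar scan (polar readings) into (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        # Skip invalid returns; NaN and inf also fail this comparison.
        if not (range_min <= r <= range_max):
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    print(tof_to_range(20e-9))   # a 20 ns round trip is roughly 3 m
    print(scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 2, 0.05, 10.0))
```

These points are the raw material that LiDAR SLAM matches against the map.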

CHALLENGES IN AUTONOMOUS NAVIGATION OF MOBILE ROBOTS AND HOW WE OVERCOME THEM

Mapping, localization, and navigation are among the greatest challenges in autonomous mobile robotics, especially for AMRs designed for outdoor environments. At Robotnik, we focus much of our effort on continuously developing and improving these capabilities to offer increasingly robust, adaptive, and reliable solutions across varied scenarios.

Autonomous navigation technology faces both technical and operational hurdles, which intensify in dynamic, complex, and unstructured environments. Although there has been significant advancement in this field, many areas still offer room for improvement. Some of the most relevant challenges include:

ENVIRONMENTAL PERCEPTION

- Challenge: Robots must accurately perceive and interpret their surroundings using sensors like cameras, LiDAR, radar, and ultrasonic sensors. Variable lighting, dust, adverse weather, or reflective surfaces can degrade data quality.

- Solution: Sensor fusion, advanced processing algorithms, and AI models trained for variable conditions greatly enhance the robustness and reliability of perception.
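Sensor fusion can be as simple as weighting each measurement by how much it is trusted. The Python sketch below fuses two independent estimates of the same quantity, such as a distance measured by a LiDAR and by a depth camera, assuming Gaussian noise; this is the measurement-update step of a one-dimensional Kalman filter. The `fuse` function and the noise values in the example are hypothetical.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Equivalent to a 1D Kalman measurement update: the less noisy input
    (smaller variance) gets more weight, and the result is more certain
    than either input.
    """
    gain = var_a / (var_a + var_b)
    mean = mean_a + gain * (mean_b - mean_a)
    var = (1.0 - gain) * var_a   # equals var_a * var_b / (var_a + var_b)
    return mean, var


if __name__ == "__main__":
    # Hypothetical readings: LiDAR 2.00 m (sigma 2 cm), depth camera 2.10 m (sigma 6 cm).
    print(fuse(2.00, 0.02 ** 2, 2.10, 0.06 ** 2))
```

The fused estimate lands close to the more precise LiDAR reading, and its variance is smaller than either input's, which is why combining sensors improves robustness when one of them degrades.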

ROUTE PLANNING

- Challenge: Designing optimal trajectories in real time is particularly complex in dynamic environments, where the robot must anticipate and react to moving obstacles like people or vehicles.

- Solution: Dynamic planning algorithms and predictive models recompute routes within milliseconds, optimizing both safety and efficiency.
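A simple example of a predictive model is to assume that a person or vehicle keeps its current velocity. The Python sketch below checks whether a planned path would come within a safety radius of such an obstacle; if it does, the planner could replan or slow down. The function name, time step, and radius are hypothetical, and real systems typically use richer motion predictors.

```python
import math

def predict_conflict(robot_path, obstacle_pos, obstacle_vel, dt, safety_radius):
    """Return the first path index where the robot comes too close, or None.

    robot_path: (x, y) positions the robot will occupy at t = 0, dt, 2*dt, ...
    The obstacle is assumed to move at constant velocity (x_vel, y_vel).
    """
    ox, oy = obstacle_pos
    vx, vy = obstacle_vel
    for step, (rx, ry) in enumerate(robot_path):
        t = step * dt
        px, py = ox + vx * t, oy + vy * t   # constant-velocity prediction
        if math.hypot(rx - px, ry - py) < safety_radius:
            return step
    return None


if __name__ == "__main__":
    # Robot drives along x at 0.5 m/s; a person crosses its path walking toward -y.
    path = [(0.25 * k, 0.0) for k in range(11)]
    print(predict_conflict(path, (2.0, 2.0), (0.0, -1.0), dt=0.5, safety_radius=1.2))
```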

COLLISION AVOIDANCE

- Challenge: Detecting and avoiding obstacles in real time, especially in tight or crowded spaces, is a constant challenge. Sensor errors or processing delays can compromise system safety.

- Solution: Robots employ redundant detection systems, reactive controllers, and AI-based local planning to enable rapid, precise evasive maneuvers.
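One common reactive behavior scales the commanded speed with the distance to the nearest detected obstacle. The Python sketch below uses hypothetical stop and slow-down thresholds; it illustrates the idea only and is not a certified safety function.

```python
def scale_speed(nominal_speed, min_obstacle_dist, stop_dist=0.5, slow_dist=1.5):
    """Reduce the commanded speed as the nearest obstacle gets closer (distances in m)."""
    if min_obstacle_dist <= stop_dist:
        return 0.0                      # inside the stop zone: halt
    if min_obstacle_dist >= slow_dist:
        return nominal_speed            # path is clear: full speed
    # Linear ramp between the stop and slow-down thresholds.
    return nominal_speed * (min_obstacle_dist - stop_dist) / (slow_dist - stop_dist)


if __name__ == "__main__":
    for d in (2.0, 1.0, 0.4):
        print(d, scale_speed(1.0, d))
```

Because this check runs on every sensor update without waiting for the global planner, the robot can react to a sudden obstacle even while a new route is still being computed.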

ADAPTABILITY AND LEARNING

- Challenge: Real-world environments are highly variable and unpredictable. The robot’s ability to learn and adapt to new situations is essential for safe and effective navigation.

- Solution: Modern navigation systems integrate machine learning and reinforcement learning algorithms, enabling performance improvement through experience, adaptation to environmental changes, and the acquisition of new behavioral patterns.
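Reinforcement learning can be illustrated with tabular Q-learning, one of the simplest RL algorithms. The Python sketch below applies a single update, Q(s, a) += alpha * (r + gamma * max Q(s', a') - Q(s, a)). The states, actions, reward, and learning parameters are hypothetical, and navigation systems that learn from experience generally use function approximation (for example, neural networks) rather than a lookup table.

```python
def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q(state, action).

    q maps (state, action) pairs to values; missing entries count as 0.0.
    """
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    td_target = reward + gamma * best_next          # bootstrapped estimate of the return
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (td_target - old)
    return q[(state, action)]


if __name__ == "__main__":
    q = {}
    actions = ["forward", "turn_left", "turn_right"]
    # Hypothetical experience: moving forward in a clear corridor earned reward 1.0.
    print(q_update(q, "corridor_clear", "forward", 1.0, "corridor_clear", actions))
```

Repeating this update over many episodes gradually raises the value of actions that lead to good outcomes, which is the sense in which the robot "improves through experience".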

REAL-TIME COMPUTING

- Challenge: Autonomous decision-making requires processing vast amounts of sensor data in milliseconds, integrating perception, planning, and control with minimal latency.

- Solution: Optimized hardware architectures (e.g., GPUs, edge computing) combined with highly efficient software enable real-time execution of mission-critical operations.

SAFETY

- Challenge: Safety is paramount, especially in collaborative environments where robots interact with humans. Any failure in navigation can endanger users and the system itself.

- Solution: Active and passive safety systems, emergency stop protocols, pre-deployment simulation, and certified behaviors ensure predictable and reliable operation even under unexpected conditions.

THE FUTURE OF AUTONOMOUS NAVIGATION IN MOBILE ROBOTS

Autonomous navigation represents one of the fundamental pillars in the development of mobile robotics, and its evolution will shape the future of multiple industrial, logistics, and service sectors. In the coming years, a significant transformation is expected, driven by the integration of more advanced technologies capable of providing robots with greater intelligence, precision, and adaptability.

One of the most promising developments is advanced sensor fusion, which combines data from LiDAR, cameras, ultrasonic sensors, and IMUs to offer a more robust and accurate perception of the environment. This integration enables robots to better interpret complex scenarios, even under adverse environmental conditions.

Another key area is the development of SLAM systems enhanced by artificial intelligence, where neural networks and deep learning algorithms optimize real-time mapping and localization. This is especially useful in dynamic and unstructured environments where traditional methods may fail.

Moreover, the future of autonomous navigation lies in multi-robot collaboration, allowing fleets of robots to share information about maps, obstacles, and routes. This cooperation not only improves operational efficiency but also opens the door to real-time coordinated tasks, such as last-mile logistics or large-scale warehouse automation.

Lastly, energy autonomy is becoming an essential factor. New smart management and charging systems will significantly extend the operational time of robots, reducing downtime and increasing productivity.

Together, these trends are shaping a new generation of mobile robots that are more autonomous, safer, and more collaborative. At Robotnik, we continuously work to integrate these innovations into our solutions, committed to delivering technologies that meet today’s demands and anticipate the challenges of the near future.

FREQUENTLY ASKED QUESTIONS ABOUT ROBOTICS IN THE AEROSPACE INDUSTRY

Mobile robots carry out high-precision tasks such as dimensional inspections, surface treatments, and component assembly, ensuring quality and safety.

They improve repeatability, save time, require no modifications to the plant, and increase safety through human-robot collaboration.

They use 2D/3D scanning systems to move autonomously without floor markings or physical guides.